Generative Adversarial Networks (GANs)

The following contains my notes for my deep dive into the math behind simple GANs, which were proposed in the following paper [1].

Preliminaries and Definitions

- Generative Model: Given a training set sampled from a distribution $p_{\text{data}}(x)$, a generative model attempts to estimate $p_{\text{data}}(x)$. The distribution of the generative model is denoted by $p_{\text{model}}(x)$, and $p_{\text{model}}(x)$ can used to generate new (or similar) samples from $p_\text{data}(x)$.

- Zero-Sum Game: A game where the costs of all players equals zero. It’s also called a minimax game.

- Convex set: A set $\mathcal{S}$ is a convex set if for any two members $x_1, x_2 \in \mathcal{S}$ and scalar $\alpha \in [0,1]$, the convex combination defined by $\alpha x_1 + (1-\alpha) x_2 \in S$.

- Convex function: If $f$ is a convex function, then for all $\alpha \in [0,1]$ and $x_1, x_2 \in \mathcal{S}$, where $\mathcal{S}$ is a convex set, the following is true $f(\alpha x_1 + (1-\alpha) x_2) \leq \alpha f(x_1) + (1-\alpha) f(x_2)$.

- Iterated Expectation: For two random variables $X,Y$ , the following holds $\mathbb{E}[g(X,Y)] = \mathbb{E}_{Y}[ \mathbb{E}_{X|Y} [g(X,Y)] ]$

- Kullback–Leibler (KL) divergence: Measures the difference between two distributions $p(x)$ and $q(x)$. It is computed as follows: \(\text{KL}(p||q) = - \int p(x) \log\bigg(\frac{q(x)}{p(x)}\bigg) dx = - \mathbb{E}_{p(x)} \log\bigg(\frac{q(x)}{p(x)}\bigg)\). The KL divergence is not symmetric $\text{KL}(p||q) \neq \text{KL}(q||p)$ and the measure is non-negative $\text{KL}(p||q) \geq 0$ and it achieves equality if and only if $p(x) = q(x)$. The KL divergence is not considered a metric in the traditional sense.

Overview of Generative Models

Generative models fall into two categories: Explicit and Implicit

Explicit Models

Many generative models are trained using maximum likelihood estimation (MLE) with an explicit density function. In particular, the model distribution $p_{\text{model}}$ is selected from a specific parametric family of distributions with parameters $\theta$ (e.g. Gaussian, Bernoulli). Given a model distribution $p_{\text{model}}(x;\theta)$ with parameters $\theta$ and an i.i.d dataset $\{x_i\}_{i=1}^N$ sampled from $p_{\text{data}}(x)$, the likelihood of the data under the given model is given by $\prod_{i=1}^N p_{\text{model}}(x_i;\theta)$. ML estimation involves finding the parameters $\theta$ that maximize the likelihood of the data under the given model:

\[\theta^* = \text{argmax}_\theta \prod_{i=1}^N p_{\text{model}}(x_i;\theta)\]Since $0 < a < b \Leftrightarrow \log a < \log b $,

\[\theta^* = \text{argmax}_\theta \sum_{i=1}^N \log p_{\text{model}}(x_i;\theta)\]Maximizing the ML is equivalent to minimizing the KL divergence between the $p_{\text{data}}(x)$ and $p_{\text{model}}(x;\theta)$:

\[\text{argmax}_\theta \sum_{i=1}^N \log p_{\text{model}}(x_i;\theta)\] \[\text{argmin}_\theta -\frac{1}{N} \sum_{i=1}^N \log p_{\text{model}}(x_i;\theta)\] \[\text{argmin}_\theta -\frac{1}{N} \sum_{i=1}^N [\log p_{\text{model}}(x_i;\theta) - \log p_{\text{data}}(x)]\]Using the law of large numbers:

\[\text{argmin}_\theta -\mathbb{E}_{p_{\text{data}}(x)}[\log p_{\text{model}}(x_i;\theta) - \log p_{\text{data}}(x)]\] \[\text{argmin}_\theta -\mathbb{E}_{p_{\text{data}}(x)}\bigg[\log \frac{ p_{\text{model}}(x_i;\theta)}{ p_{\text{data}}(x)}\bigg]\] \[\text{argmin}_\theta \text{KL}(p _{\text{data}} || p _{\text{model}})\]In essence, the MLE attempts to find the distribution in the parametric family $p_{\text{model}}(x;\theta)$ that is the closest to $p_{\text{data}}(x)$. As suggested in [2], sometimes tractable explicit densities impose design limitations, so explicit models with intractabilities are used instead. Explicit models with intractabilities often require variational approximations (Variational Autoencoders) or Markov chain approximations (Restricted Boltzmann Machines).

Implicit models

Implicit generative methods rely on the ability to sample from $p_\text{model}$ rather than the ability to evaluate or approximate it. An implicit generative model samples from a latent variable from the distribution $p(z)$ where $z \in \mathcal{Z} \subseteq \mathbb{R}^m$, and uses a deterministic function $G: \mathcal{Z} \mapsto \mathcal{X}$, in order to map the latent variable $z$ to $x \in \mathcal{X} \subseteq \mathbb{R}^n$. The generation process is shown below as graphical model:

The distribution induced by the transform $G$ is given by:

\[p_g(x) = \frac{\partial}{\partial x_1} \cdots \frac{\partial}{\partial x_n} \int_{G(z) \leq x} p(z) dz\]Note, $p_g$ is the same as the model distribution, $p_{\text{model}}$. Typically, the function $G$ is parameterized with a set of parameters $\theta$. In the deep learning setting, $G_\theta$ is a neural network model and $\theta$ are the network parameters. In order to perform MLE on $p_g$, it would require the evaluation of the integral and derivatives of above which is made difficult due to [3]:

- Regions of integration $\{G(z) \leq x\}$ are difficult to determine.

- Computing the integral is impossible even if the regions are known.

- The derivatives are high dimensional and difficult to compute.

Computing $p_g$ when $G_\theta$ is a neural network is intractable for the reasons given above, but another reason for the inability to use MLE results from inability to provide a variational approximation or Markov Chain approximation. Lastly, the GAN training framework provides a method for fitting an implicit generative model. See [2, 3, 4, 13] for more information on implicit models.

GANs

Setup

Generative Adversarial Networks (GANs) provide a framework for fitting implicit generative models using an adversarial two-player game. A GAN consists of two neural networks: Generator ($G$) and Discriminator ($D$) with parameters $\theta_g$ and $\theta_d$ respectively. The generator network is the deterministic function that attempts to map the input latent random vector with distribution $p(z)$ (sometimes called a noise vector) to the desired data distribution (called the real data distribution). The distribution given by the generator is denoted by $x = G(z) \sim p_g(x)$. The discriminator network has the task of determining the whether the data presented to it came from the generator network’s output distribution or the real data distribution. The discriminator outputs a probability that indicates it’s belief that the input came from the real data distribution. In essence, if $x \sim p_{\text{data}}(x)$, then $D(x) \rightarrow 1$ and if $x \sim p_{g}(x)$, then $D(x) \rightarrow 0$. The generator network wins the game when the discriminator network is reduced to random guessing (e.g $D = 0.5$ for all input). A useful analogy from the original paper [1] describes the adversarial training process as: “ The generative model can be thought of as analogous to a team of counterfeiters, trying to produce fake currency and use it without detection, while the discriminative model is analogous to the police, trying to detect the counterfeit currency”. Ultimately, the problem is cast a two player minimax game:

\[\text{min}_G \text{max}_D \mathbb{E}_{z \sim p(z)} [\log (1-D(G(z))] + \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log D(x)]\]Developing the GAN Loss

Suppose you need to build a discriminative classifier $P(y|x)$ between real and fake examples. Let $y \sim \text{Bern}(q)$ be a Bernoulli binary random variable, where $y=1$ denotes a real sample and $y=0$ denotes a fake or generator sample. Let’s assume $p(x|y) = p_{\text{data}}(x)^y p_{g}(x)^{1-y}$. We will find the optimal classifier by minimizing the cross entropy [12]:

\[\text{min}_{P(y|x)}-\mathbb{E}_{x,y}[\log p(y|x)]\]By iterated expectation:

\[\text{max}_{P(y|x)}(1-q)\mathbb{E}_{x|y=0} [\log p(y=0|x)] + q \mathbb{E}_{x|y=1}[\log p(y=1|x)]\] \[\text{max}_{P(y=1|x)}(1-q)\mathbb{E}_{x|y=0} [\log (1-p(y=1|x))] + q \mathbb{E}_{x|y=1}[\log p(y=1|x)]\]Since for $x \sim p_{\text{data}}(x)$, $D(x) \rightarrow 1$ and for $x \sim p_{g}(x)$, $D(x) \rightarrow 0$, $D(x)$ behaves exactly like our discriminative classifier. Hence we set $P(y=1|x)=D(x)$.

\[\text{max}_{P(y=1|x)}(1-q)\mathbb{E}_{x|y=0} [\log (1-D(x))] + q \mathbb{E}_{x|y=1}[\log D(x)]\]Also, note that $p(x|y) = p_{\text{data}}(x)^y p_{g}(x)^{1-y}$.

\[\text{max}_D(1-q)\mathbb{E}_{p_{g}} [\log (1-D(x))] + q \mathbb{E}_{x\sim p_{\text{data}}}[\log D(x)]\]Since $G: \mathcal{Z} \mapsto \mathcal{X}$ and $z \sim p(z)$:

\[\text{max}_D (1-q)\mathbb{E}_{p(z)} [\log (1-D(G(z))] + q \mathbb{E}_{x\sim p_{\text{data}}}[\log D(x)]\]We select $q = 1/2$ (since we control the sampling of $y$):

\[\text{max}_D \frac{1}{2}\mathbb{E}_{p(z)} [\log (1-D(G(z))] + \frac{1}{2} \mathbb{E}_{x\sim p_{\text{data}}}[\log D(x)]\] \[\text{max}_D \mathbb{E}_{p(z)} [\log (1-D(G(z))] + \mathbb{E}_{x\sim p_{\text{data}}}[\log D(x)]\]In order to fool a fixed $D$, we need to find the $G$ that maximizes the loss:

\[\text{max}_G -\mathbb{E}_{x,y} P(y|x)\] \[\text{min}_G \mathbb{E}_{p(z)} [\log (1-D(G(z))] + \mathbb{E}_{x\sim p_{\text{data}}}[\log D(x)]\] \[\text{min}_G \mathbb{E}_{p(z)} \log (1-D(G(z))]\]Since we want to fool the optimal $D^* = \text{argmax}_D \mathbb{E}_{z \sim p(z)} [\log (1-D(G(z))] + \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log D(x)]$, the overall problem becomes:

\[\text{min}_G \text{max}_D \mathbb{E}_{p(z)\sim z} [\log (1-D(G(z))] + \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log D(x)]\]A similar derivation is developed in [3].

Finding the optimal $D$

In both proofs, we assume that the discriminator is not defined outside the union of the support of $p_\text{data}$ and the support of $p_g$. Two methods of finding the optimal discriminator are presented: the log trick method and a calculus of variations method.

Using the Log Trick

Assume a fixed $G$,

\[\text{max}_D \mathbb{E}_{p(z)} [\log (1-D(G(z))] + \mathbb{E}_{x\sim p_{\text{data}}}[\log D(x)]\] \[\text{max}_D \int p(z) \log (1-D(G(z)) dz + \int p_{\text{data}}(x)\log D(x) dx\] \[\text{max}_D\int p_g(x) \log (1-D(x)) + p_{\text{data}}(x)\log D(x) dx\] \[\text{max}_D\int p_g(x) \log (1-D(x)) + p_{\text{data}}(x)\log D(x) dx \leq\] \[\int \text{max}_{D(x)} [p_g(x) \log (1-D(x)) + p_{\text{data}}(x)\log D(x)] dx\]Using the fact, that for any $(a,b) \in \mathbb{R}^2 \setminus {0, 0} $, the function $y \rightarrow a \log (y) + b \log (1-y)$ achieves its maximum in $[0,1]$ at $\frac{a}{a+b}$. The upper bound is met with equality for the following discriminator:

\[D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x)+ p_g(x)}\]Using Calculus of Variations

Assume a fixed $G$,

\[\text{max}_D \mathbb{E}_{p(z)} [\log (1-D(G(z))] + \mathbb{E}_{x\sim p_{\text{data}}}[\log D(x)]\] \[\int p(z) \log (1-D(G(z)) dz + \int p_{\text{data}}(x)\log D(x) dx\] \[F[D] = \int p_g(x) \log (1-D(x)) + p_{\text{data}}(x)\log D(x) dx\]The functional $F$ has a stationary point at the function $D^*(x)$ that satisfies the Euler-Lagrange equation:

\[\frac{d}{dD} [p_g(x) \log (1-D(x)) + p_{\text{data}}(x)\log D(x)]\bigg|_{D=D^*} = 0\] \[\frac{p_{\text{data}}(x)}{D^*(x)} - \frac{p_g(x)}{1-D^*(x)}= 0\] \[D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x)+p_g(x)}\]Since $-F[D]$ is convex in $D$, $D^*$ is the global optimal discriminator. For more resources on calculus of variations, see [5, 9] .

Conditions for the optimal $G$

Given the optimal discriminator $D^*$:

\[G^* = \text{min}_G \mathbb{E}_{p_g} [\log \frac{p_g(x)}{p_{\text{data}}(x)+p_g(x)}] + \mathbb{E}_{x\sim p_{\text{data}}}[\log \frac{p_{\text{data}}(x)}{p_{\text{data}}(x)+ p_g(x)} ]\] \[G^* = \text{min}_G \mathbb{E}_{p_g} [\log \frac{2p_g(x)}{p_{\text{data}}(x)+p_g(x)}] + \mathbb{E}_{x\sim p_{\text{data}}}[\log \frac{2p_{\text{data}}(x)}{p_{\text{data}}(x)+ p_g(x)} ] - \log 4\]Using the definition of KL divergence:

\[G^* = \min_G KL(p_g|| \frac{p_g+p_{\text{data}}}{2}) + KL(p_{\text{data}}||\frac{p_g+p_{\text{data}}}{2}) - \log 4\]Using the definition of Jensen Shannon Divergence:

\[G^* = \min_G 2JSD(p_g || p_{\text{data}}) - \log 4\]Therefore, the optimal $G^*$ requires that $p_g = p_{\text{data}}$ and it achieves a loss value of $-\log 4$ under the loss above. Since, the loss is convex in $p_g$ therefore $p_{\text{data}}$ is the unique global minimizer. Also, when $p_g = p_{\text{data}}$, $D^* = \frac{1}{2}$ which implies the performance of $D^*$ is equal to random guessing.

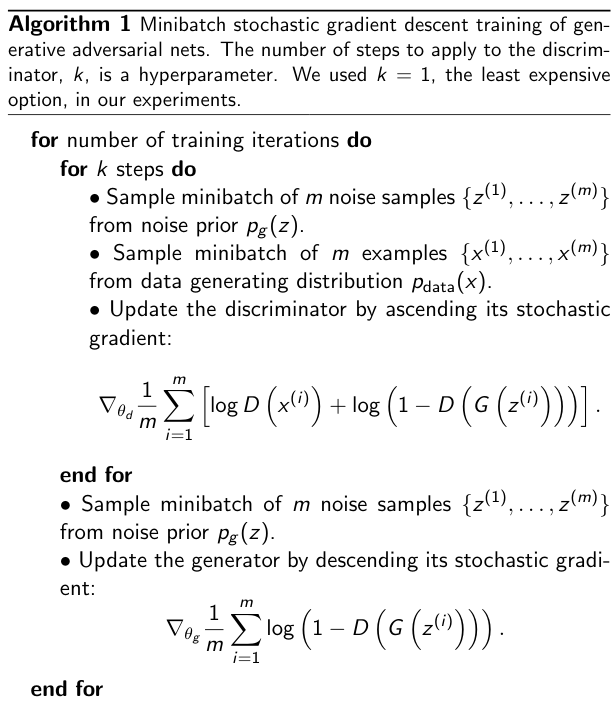

Training

GANs use a gradient ascent-descent algorithm in order to train $D$ and $G$. The algorithm is presented below:

Loss Functions

The objectives below are optimized at each step of the gradient ascent-descent:

Discriminator Objective:

\[\max_{\theta_d} \mathbb{E}_{p(z)} [\log(1-D(G(z;\theta_g));\theta_d)] + \mathbb{E}_{p_{\text{data}}(x)} [\log D(x;\theta_d)]\]Generator Objective:

\[\min_{\theta_g} \mathbb{E}_{p(z)} [\log(1-D(G(z;\theta_g));\theta_d)]\]Loss Function - Non-Saturating Heuristic

Generator loss gradient saturates too quickly at the beginning of training because the discriminator can easily differentiate between the real and fake samples. The original GAN paper [1] suggests replacing the generator’s loss $\min \log(1-D(G(z)))$ with $\max \log D(G(z))$. $\max \log D(G(z))$ is referred to as the non-saturating loss.

Since $D = \sigma(\phi(G(z)))$ where $\sigma$ is a sigmoid and $\phi$ is the logit of the discriminator at $G(z)$

- $\frac{d}{d\phi} \bigg|_{\phi=\phi(G(z))} \log(1-\sigma(\phi)) = -\frac{\sigma(\phi(G(z))^\prime}{1-\sigma(\phi(G(z))} = -\sigma(\phi(G(z)))$

- Early in training $\sigma(\phi(G(z)) \rightarrow 0$ because the discriminator can easily differentiate between the real and fake samples.

- $\frac{d}{d\phi} \bigg|_{\phi=\phi(G(z))} \log(\sigma(\phi)) = 1-\sigma(\phi(G(z))$

- The non-saturating loss doesn’t suffer from a small gradient at the beginning of training since $1-\sigma(\phi(G(z))$ will be close to $1$ during the beginning of training.

Toy Example

Setup

In the toy problem, the $p_{\text{data}}$ data distribution equals a Gaussian distribution $\mathcal{N}(3,1)$. We will assume the generator is using the following noise prior $z\sim\mathcal{N}(0,1)$ and the generator has the following form $G(z;b) = z + b$. The discriminator has following form $D(x;[w,c]) = \sigma(wx + c)$ where $\sigma$ is a sigmoid. Here we initialize $b=0$ and $c=0$ and $w$ is drawn from a $\mathcal{N}(0,1)$. The video below tracks the discriminator and the output distribution of the generator throughout the training process:

Check out the Colab notebook below to explore how to train the toy GAN:

As you can see, the final discriminator converges to roughly $\frac{1}{2}$ (random guessing) when the generator started create samples similar to $p_{\text{data}}$ (e.g. the two histograms overlapped).

Evaluation Metrics

Evaluation metrics are still an open research problem [4].

Parzen Density Estimation

Parzen Density Estimation was initially considered for evaluating GANs in [1]. Parzen Density Estimation using a Gaussian Kernel with bandwidth $\sigma$:

\[p_n(x) = \frac{1}{n}\sum_{i=1}^n \frac{1}{(2\pi\sigma^2)^{d/2}} \bigg[ \exp{\frac{1}{2\sigma^2}||x-x_i||^2} \bigg]\]where $x_i \in \mathbb{R}^d \sim p_g$ and $\sigma$ is determined using cross-validation.

- The Parzen Density estimate attempts to estimate $p_g$ using samples generated by the generator network.

- The Parzen Density estimate is used to evaluate the likelihood of the test data under the estimated $p_g$.

- Parzen density estimates tend to perform poorly in high dimensional spaces.

Inception Score (IS)

The Inception Score was proposed in [6], and it is defined as follows:

\[\text{IS} = \exp(\mathbb{E}_{x\sim p_g} KL(p(y|x)||p(y))\]Both $p(y|x)$ and $p(y)= \int p(y|x)p_g(x) dx \approx \frac{1}{n}\sum_i p(y|x_i)$ are estimated using an Inception V3 pre-trained on ImageNet .

- $p(y|x)$ should be low entropy if the objects produce a clear and yield confident classifications.

- $p(y)$ generated samples should have high diversity and therefore high entropy.

A higher IS implies better generative quality. IS has some drawbacks such a sensitivity to the Inception weights used, fails to detect memorization, and the need to estimate $p(y)$. See [7] for a detailed discussion on the IS.

Fréchet Inception Distance

Fréchet Inception Distance [8,10]:

\[\text{FID} = ||\mu_{data} - \mu_{g}||_2^2 + \text{Tr}(\Sigma_{data} + \Sigma_{g} - 2 (\Sigma_{data} \Sigma_{g})^{1/2})\]$\mu$ and $\Sigma$ represent the mean and covariance. The FID uses the last pooling layer of Inception v3 in computing $\mu$ and $\Sigma$. A smaller FID score implies better performance.

- Assumes that the embeddings provided for the real and generated data follow a multivariate Gaussian.

- FID score can detect intra-class mode dropping (e.g. producing one image for each class) [11].

- Some drawbacks include sensitivity to the Inception weights used and fails to detect memorization.

References:

- Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, BingXu, David Warde-Farley, Sherjil Ozair, Aaron Courville,and Yoshua Bengio, Generative adversarial nets, Advances in neural information processing systems, 2014,pp. 2672–2680

- Ian Goodfellow, Nips 2016 tutorial: Generative adversarial networks, arXiv preprint arXiv:1701.00160 (2016)

- Mohamed, Shakir, and Balaji Lakshminarayanan. “Learning in implicit generative models.” arXiv preprint arXiv:1610.03483 (2016).

- Pieter Abbeel’s CS294-158-SP20 Deep Unsupervised Learning Spring 2020 Notes

- Rockafellar, R. T. “Convex analysis in the calculus of variations.” Advances in Convex Analysis and Global Optimization. Springer, Boston, MA, 2001. 135-151.

- Salimans, Tim, et al. “Improved techniques for training gans.” Advances in neural information processing systems. 2016.

- Barratt, Shane, and Rishi Sharma. “A note on the inception score.” arXiv preprint arXiv:1801.01973 (2018).

- Heusel, Martin, et al. “Gans trained by a two time-scale update rule converge to a local nash equilibrium.” Advances in neural information processing systems. 2017.

- Christopher M Bishop,Pattern recognition and Machine Learning, Springer, 2006

- Dowson, D. C., and B. V. Landau. “The Fréchet distance between multivariate normal distributions.” Journal of multivariate analysis 12.3 (1982): 450-455.

- Mario Lucic, Karol Kurach, Marcin Michalski, Sylvain Gelly, and Olivier Bousquet, Are gans created equal? A large-scale study, Advances in neural information processing systems, 2018, pp. 700–709

- A. Buja, W. Stuetzle, and Y. Shen. Loss functions for binaryclass probability estimation and classification: Structure and applications.Working draft, November, 2005

- Li, Ke, and Jitendra Malik. “Implicit maximum likelihood estimation.” arXiv preprint arXiv:1809.09087 (2018).

If you find any errors or have useful input, feel free to comment below or message me.